Monitoring and Observability

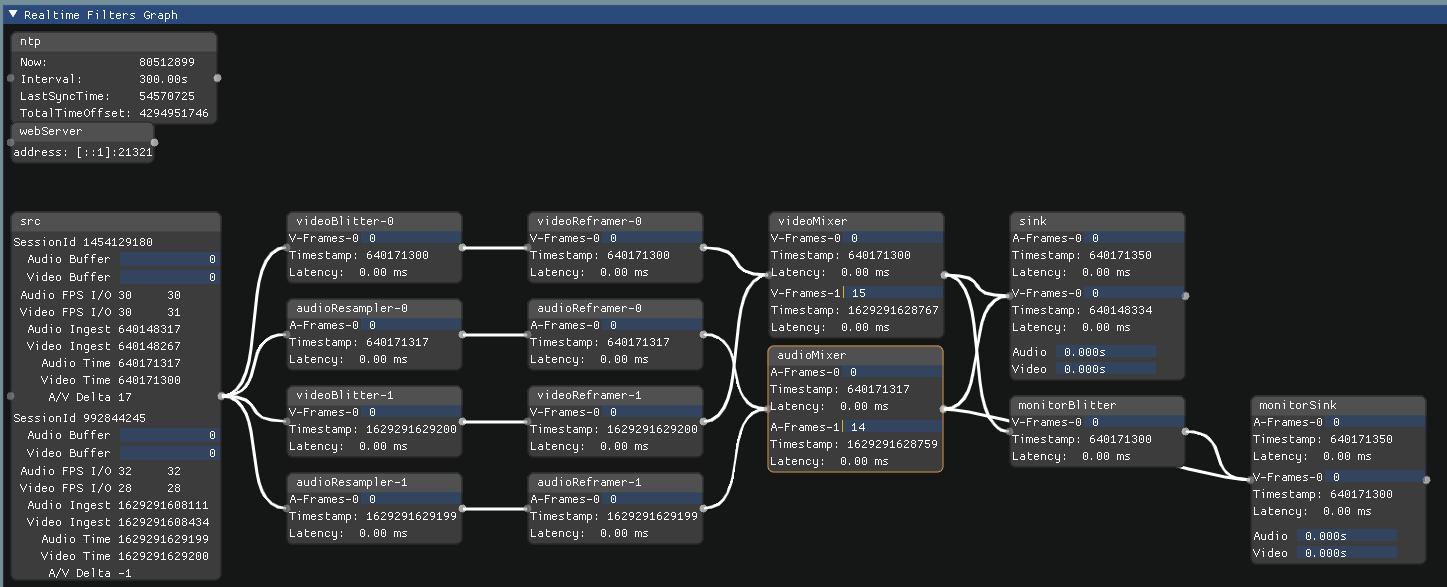

RemoteGUI

The RemoteGUI provides live, visual, and interactive information about your program. It is currently supported by GVEncode, Composed, and the SDK. For now, user interaction is limited to closing and moving information rectangles and toggling some configuration settings.

Encoding

The encoding service interface exposes feedback from its various filters, such as each filter’s time, latency, and number of frames.

Input Buffers

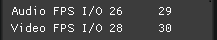

The Audio Buffer and Video Buffer fields in the source-filter (src) node display information about the audio and video buffers internal to the filter.

These fields show the current number of frames in the input buffers. Normally, each buffer should contain at most one or two frames. Any accumulation beyond that indicates that the filter is receiving frames faster than it can output them to the next filter.
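
If you sample these fields periodically, the rule of thumb above can be turned into a simple check. The following Python sketch is illustrative only: the `is_backlogged` function, the three-sample window, and the idea of collecting readings into a list are assumptions, not part of GVEncode or the RemoteGUI. Requiring several consecutive high readings avoids flagging a momentary spike.

```python
from typing import Sequence

# "At most one or two frames" per the guideline above.
HEALTHY_MAX_FRAMES = 2


def is_backlogged(samples: Sequence[int], window: int = 3,
                  limit: int = HEALTHY_MAX_FRAMES) -> bool:
    """Return True if each of the last `window` samples exceeds `limit`.

    `samples` holds hypothetical Audio Buffer or Video Buffer readings taken
    at a regular interval, oldest first. Returns False when fewer than
    `window` readings are available.
    """
    recent = samples[-window:]
    return len(recent) == window and all(count > limit for count in recent)


print(is_backlogged([1, 2, 1, 2]))   # False: within the healthy range
print(is_backlogged([1, 4, 7, 11]))  # True: frames keep accumulating
```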

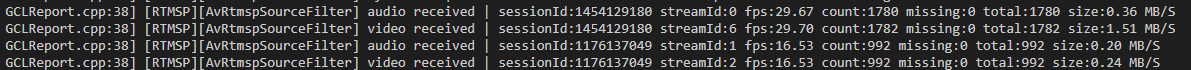

More information about the input frames is available in the GVEncode logs. This information is provided by the Genvid Connection Library, as shown in the picture below.

These logs are continuously updated with information about how many frames have been received, which sessions they are associated with, and which streams they go through (for the source filter, these can be audio and video). They also report the frame reception rates, in frames per second and in megabytes per second.

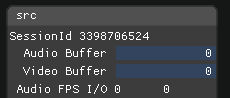

Framerate Indicators

The Audio FPS I/O and Video FPS I/O fields in the source-buffer node display the current reception and output framerates.

The left column shows the audio and video reception framerates. The right column displays the rate at which frames are pushed to the next filter.
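
Comparing the two columns tells you how fast a buffer grows: while the reception rate stays above the output rate, the difference accumulates in the input buffer. The sketch below does that arithmetic. The `backlog_growth` function is hypothetical; its inputs simply stand for the values read from the Audio FPS I/O or Video FPS I/O field, and it assumes both rates stay constant over the period.

```python
def backlog_growth(fps_in: float, fps_out: float, seconds: float) -> float:
    """Estimate how many frames accumulate in an input buffer.

    fps_in:  reception framerate (left column of the FPS I/O field).
    fps_out: output framerate (right column of the FPS I/O field).
    seconds: how long the two rates are assumed to stay constant.

    Returns 0 when the filter keeps up (output rate >= reception rate).
    """
    return max(0.0, (fps_in - fps_out) * seconds)


# A filter receiving 30 fps but pushing only 29.5 fps downstream gains
# 0.5 frames per second, i.e. 30 extra frames after one minute.
print(backlog_growth(30.0, 29.5, 60.0))  # 30.0
```

Even a small gap between the two columns adds up quickly, which is why the buffer counts above are worth watching over time rather than at a single instant.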

The input framerates can also be monitored through the GVEncode logs, which are provided by the Genvid Connection Library as shown in the picture below.

Along with how many frames have been received and which sessions and streams they belong to, these logs report the frame reception rates, in frames per second and in megabytes per second.

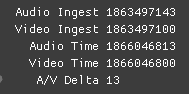

Timing Indicators

The Audio Ingest, Video Ingest, Audio Time, and Video Time fields in the source node present timing information for input frames.

The Audio Ingest and Video Ingest fields show the timestamps of the first audio and video frames received by the source filter. This information can also be found in the GVEncode logs: look for entries beginning with "First received audio timestamp" and "First received video timestamp". These entries also let you compare each frame’s timestamp with the local GVEncode time at which it was received.
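
If you save the GVEncode logs to a file, a filter such as the one below can pull out those entries. This is a sketch: the `first_frame_entries` function is hypothetical, and it assumes only that the relevant lines contain the two phrases quoted above, not any particular layout for the rest of the line.

```python
from typing import Iterable, List

# Phrases that begin the GVEncode log entries for the first received frames.
FIRST_FRAME_MARKERS = (
    "First received audio timestamp",
    "First received video timestamp",
)


def first_frame_entries(lines: Iterable[str]) -> List[str]:
    """Return the log lines that report the first received audio/video frames.

    Substring matching is used instead of startswith() because a log line
    may carry a prefix (date, level, component) before the message itself.
    """
    return [line.rstrip("\n") for line in lines
            if any(marker in line for marker in FIRST_FRAME_MARKERS)]
```

For example, pass it an open log file, as in `first_frame_entries(open(path, encoding="utf-8"))`, where `path` points to wherever your GVEncode logs are stored.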

You can compare these timestamps with the continuously updated Audio Time and Video Time fields, which show the timestamp of the most recent frame of each stream received by the source filter. If these fields stop updating, the filter has most likely stopped receiving input.
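
Detecting that a field has stopped updating amounts to remembering when its value last changed. The sketch below assumes you read Audio Time or Video Time at a regular interval and feed each reading to a hypothetical `StalenessWatch` helper; the class and its two-second default timeout are illustrative choices, not GVEncode settings.

```python
import time
from typing import Optional


class StalenessWatch:
    """Report when a repeatedly read value (e.g. Video Time) stops changing.

    The timeout is an assumption: pick a value comfortably larger than the
    interval at which you read the field.
    """

    def __init__(self, timeout_s: float = 2.0) -> None:
        self.timeout_s = timeout_s
        self._last_value: Optional[float] = None
        self._last_change: float = 0.0

    def update(self, value: float, now: Optional[float] = None) -> bool:
        """Record a reading; return True if it has not changed for longer than the timeout."""
        now = time.monotonic() if now is None else now
        if value != self._last_value:
            self._last_value = value
            self._last_change = now
            return False
        return now - self._last_change > self.timeout_s


watch = StalenessWatch(timeout_s=2.0)
print(watch.update(1000.0, now=0.0))  # False: first reading
print(watch.update(1033.0, now=1.0))  # False: the value changed
print(watch.update(1033.0, now=2.5))  # False: unchanged for only 1.5 s
print(watch.update(1033.0, now=3.5))  # True: unchanged for 2.5 s
```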

Finally, to help you evaluate audio/video synchronization, the A/V Delta field shows the difference, in milliseconds, between the timestamps of the last audio frame and the last video frame received. Normally, this difference shouldn’t exceed the duration of one frame. If it does, stream quality can suffer and the audio and video streams can drift out of sync.
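
To make "the duration of one frame" concrete: at an example rate of 30 fps, one video frame lasts 1000 / 30 ≈ 33.3 ms, so a delta above roughly 33 ms is worth investigating. The sketch below applies that check. It assumes that the comparison uses the video frame duration and that both timestamps are in milliseconds; the function names are hypothetical. It compares the absolute difference, since either stream can be the one lagging.

```python
def av_delta_ms(last_audio_ts_ms: float, last_video_ts_ms: float) -> float:
    """Absolute difference between the last audio and video timestamps, in ms."""
    return abs(last_audio_ts_ms - last_video_ts_ms)


def av_in_sync(delta_ms: float, video_fps: float) -> bool:
    """True if the A/V delta does not exceed the duration of one video frame."""
    frame_duration_ms = 1000.0 / video_fps
    return delta_ms <= frame_duration_ms


delta = av_delta_ms(10_020.0, 10_000.0)
print(delta)                    # 20.0
print(av_in_sync(delta, 30.0))  # True: 20 ms is under one 33.3 ms frame
print(av_in_sync(50.0, 30.0))   # False: more than one frame apart
```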

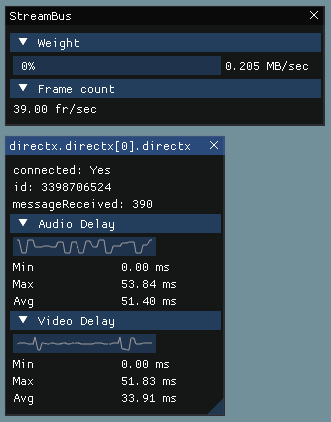

Composition

The composition service interface exposes information about its stream bus, such as the framerate, as well as feedback about the sources connected to its frontend.

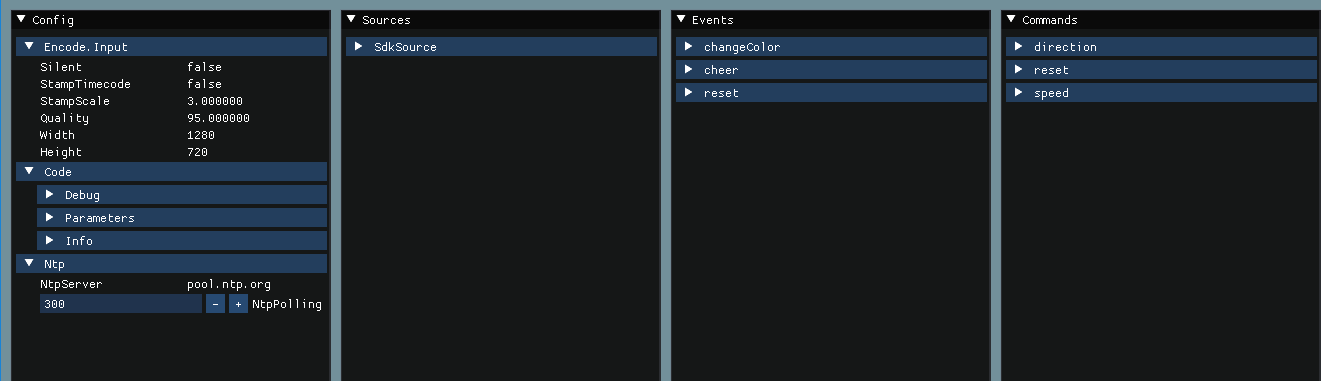

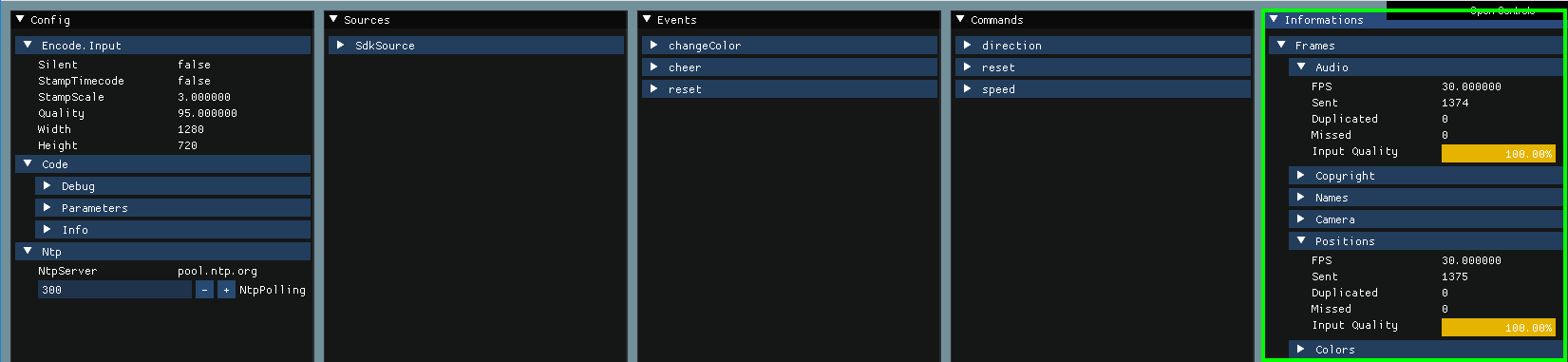

SDK

Currently, the SDK integration focuses on configuration information. It shows standard information about each stream (ID, framerate, granularity, etc.) and includes a debug console with settings you can toggle. There are also sections for events and commands, although they are not active yet.

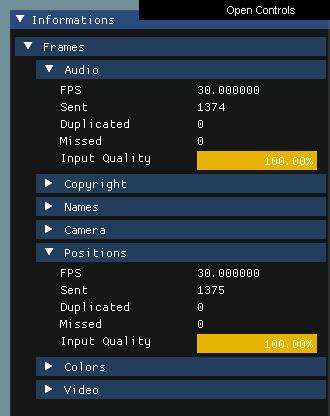

The information tab is at the far right of the SDK tabs. It provides information about the streams as the SDK sends them.

| Name | Description |
|---|---|
| FPS | The number of frames per second. [1] |
| Sent | The number of frames that were sent. |
| Duplicated | The number of extra frames that were sent to maintain the expected framerate. |
| Missed | The number of frames that were sent as missing due to lack of available data. |
| Input quality | An indication of how well the data is submitted to the SDK. [2] |
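
When comparing streams, it can help to express the Duplicated and Missed counters as a share of Sent. The sketch below is an illustrative metric of our own, not the SDK's Input quality computation; it assumes that duplicated and missed frames are included in the Sent count, and the `StreamStats` and `filler_ratio` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StreamStats:
    """Counter values copied from the SDK information tab for one stream."""
    sent: int
    duplicated: int
    missed: int


def filler_ratio(stats: StreamStats) -> float:
    """Fraction of sent frames that were duplicated or sent as missing.

    Assumes Duplicated and Missed are counted within Sent. Returns 0.0
    before any frame has been sent.
    """
    if stats.sent == 0:
        return 0.0
    return (stats.duplicated + stats.missed) / stats.sent


print(filler_ratio(StreamStats(sent=1800, duplicated=45, missed=9)))  # 0.03
```

A ratio that keeps rising suggests the game is not submitting data fast enough for the configured framerate.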

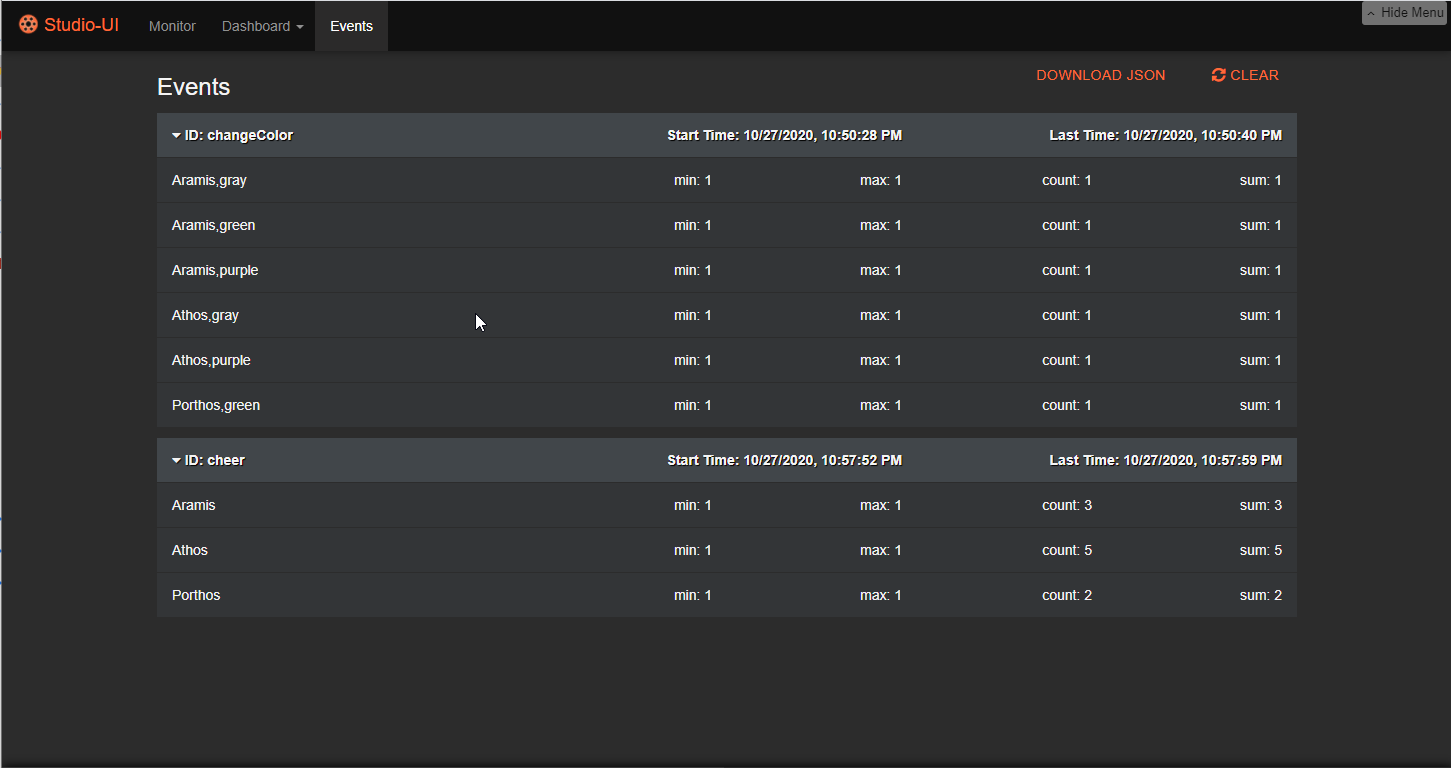

Events

The Events component is where you can view summaries of each event’s results. Each collapsed row groups the results of one event and displays the following identifying information:

ID: The name of the event.

Start Time: The time the event was triggered.

Last Time: The time the event received its last input.

Click a row to expand it and see the results of that event. Each row in the expanded group has a key on the left and four values on the right. The key is an array of strings defined in the event. The four values are numbers calculated continuously during the broadcast session:

min: The minimum value among all inputs.

max: The maximum value among all inputs.

count: The number of times the key was encountered.

sum: The total of all input values added together.
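
All four values can be maintained incrementally, one input at a time, which is what allows the summaries to stay current throughout the session. The Python sketch below shows one way to do this; the `Summary` and `EventSummaries` classes are hypothetical and not Genvid's implementation. Keys are stored as tuples because the key is an array of strings and Python lists cannot be dictionary keys.

```python
from dataclasses import dataclass
from typing import Dict, Sequence, Tuple


@dataclass
class Summary:
    """Running min/max/count/sum for one key."""
    min: float
    max: float
    count: int
    sum: float

    def add(self, value: float) -> None:
        self.min = min(self.min, value)
        self.max = max(self.max, value)
        self.count += 1
        self.sum += value


class EventSummaries:
    """Group input values by key, updating the summary on every input."""

    def __init__(self) -> None:
        self.by_key: Dict[Tuple[str, ...], Summary] = {}

    def record(self, key: Sequence[str], value: float) -> None:
        k = tuple(key)  # lists are unhashable, so store the key as a tuple
        summary = self.by_key.get(k)
        if summary is None:
            self.by_key[k] = Summary(min=value, max=value, count=1, sum=value)
        else:
            summary.add(value)


# Illustrative inputs: two values for the key ["red"], one for ["blue"].
summaries = EventSummaries()
for key, value in [(["red"], 3.0), (["blue"], 5.0), (["red"], 7.0)]:
    summaries.record(key, value)
print(summaries.by_key[("red",)])  # Summary(min=3.0, max=7.0, count=2, sum=10.0)
```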

Click Download to download the current summaries in JSON format. Click CLEAR to erase the summaries of all the events listed on the page.

Warning

Once you clear the event summaries, the data can’t be restored or downloaded.

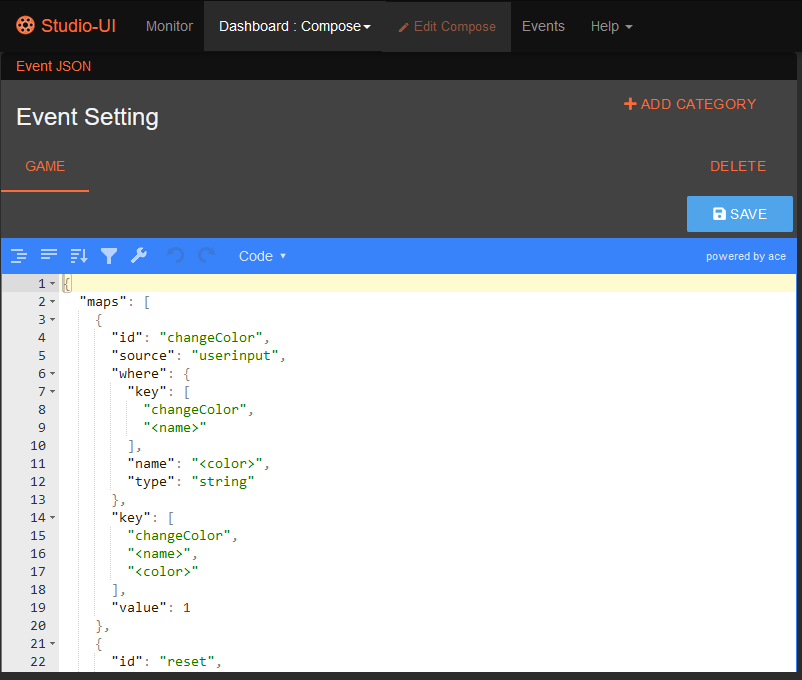

To edit the event definitions or their categories, edit the dashboard and add the Event JSON component.

See also

Events Overview for the details of how events encapsulate communication between the game process and spectators.

Events API for a guide on the different types of event data you can retrieve.