Using Clusters¶

Set Up a Bastion Server¶

To create a cluster, you first need a Genvid bastion server running. You can set up and manage a bastion server using the genvid-bastion script. The following command will set up one with the minimal services required.

From your shell, run:

genvid-bastion install --bastionid mybastion --checkmodules --update-global-tfvars --loadconfig

--bastionid mybastion: A unique identifier for your bastion. It must:

- Be between 3 and 64 characters.

- Only contain lowercase letters, numbers, or hyphens.

- Start with a letter.

--checkmodules: Use this option to install new modules if none exist or update the ones already present.

--update-global-tfvars: Use this option to update the global Terraform variables.

--loadconfig: Use this option to load the jobs and logs.
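The bastion ID rules listed above can be checked before you run the installer. The following is a minimal sketch, not part of the Genvid Toolbox: the function name `is_valid_bastion_id` is hypothetical, and the regular expression is only a direct translation of the three documented constraints.

```python
import re

# Translation of the documented rules (an illustration, not Genvid code):
# one lowercase letter first, then 2 to 63 more characters drawn from
# lowercase letters, digits, and hyphens, for 3 to 64 characters in total.
BASTION_ID_PATTERN = re.compile(r"[a-z][a-z0-9-]{2,63}")


def is_valid_bastion_id(bastion_id: str) -> bool:
    """Return True if bastion_id satisfies the three documented constraints."""
    return BASTION_ID_PATTERN.fullmatch(bastion_id) is not None
```

With this sketch, `is_valid_bastion_id("mybastion")` is accepted, while `"my"` (too short), `"1bastion"` (starts with a digit), and `"MyBastion"` (uppercase letters) are rejected.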

Important

Because Windows limits the length of file system paths, the system username and the Bastion ID also count toward the maximum possible cluster name length. The name length limit we set is reasonably safe, but an especially long username or Bastion ID can still cause issues.

Configuration¶

The bastion is configured through a series of files available under ROOTDIR/bastion-services/.

ROOTDIR/bastion-services/init

This folder contains files loaded before launching the bastion job. The content is added to the key-value store, where each object describes a folder and each value is converted to a string and inserted as a key. The folder location can be changed using GENVID_BASTION_INIT_FOLDER. For more information, see setup_jobs().
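To picture how nested objects become folders and values become string keys, here is a small sketch. It is an illustration only: the `/` path separator, the JSON encoding of non-string values, and the function name `flatten_to_kv` are assumptions, and the actual loading code may differ.

```python
import json


def flatten_to_kv(data: dict, prefix: str = "") -> dict:
    """Flatten nested objects into path-like keys with string values."""
    entries = {}
    for name, value in data.items():
        # Assumption: folders and keys are joined with "/".
        key = f"{prefix}/{name}" if prefix else name
        if isinstance(value, dict):
            # A nested object acts as a folder: recurse with a longer prefix.
            entries.update(flatten_to_kv(value, key))
        else:
            # Any other value is stored as a string under its key.
            # Assumption: non-string values are encoded as JSON text.
            entries[key] = value if isinstance(value, str) else json.dumps(value)
    return entries
```

For a hypothetical file containing `{"service": {"port": 8080, "name": "demo"}}`, the sketch produces the keys `service/port` and `service/name` with the string values `"8080"` and `"demo"`.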

The next step is to open the Bastion-UI website to manage your clusters:

genvid-bastion monitor

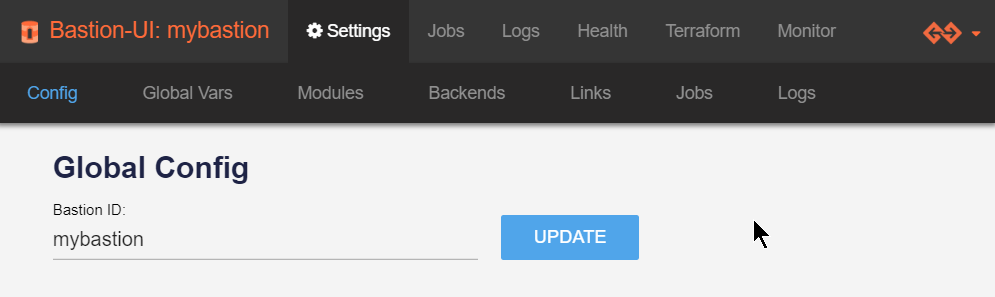

On the Bastion-UI page, you can customize the Bastion name.

- Modify the Bastion name.

- Click Update.

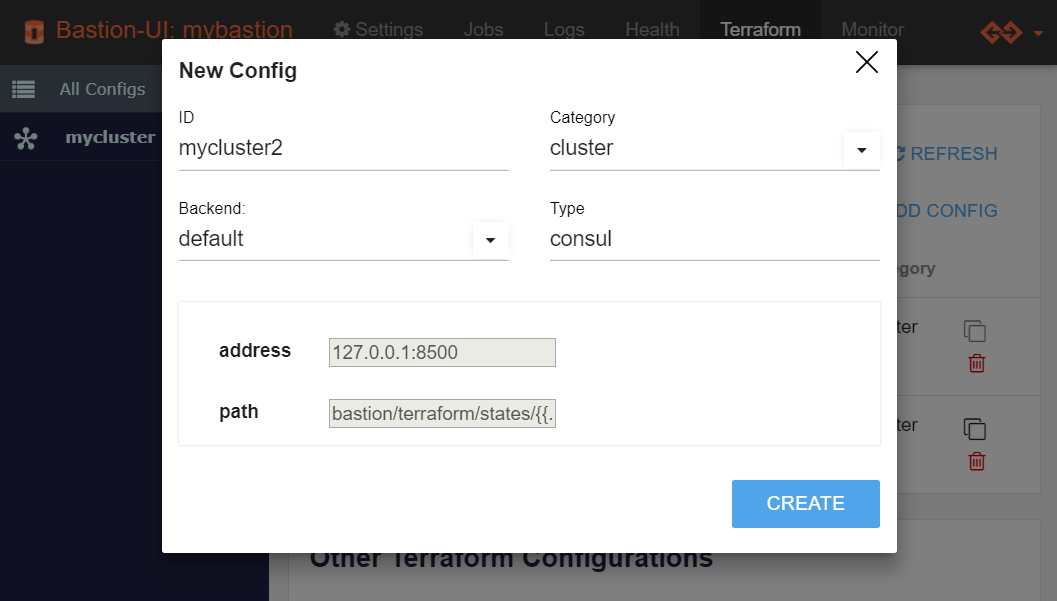

On the Terraform page:

- Click Add Config.

- Enter a unique ID.

- Choose cluster as the category.

Note

The cluster ID is limited to 64 characters because of a technical limitation.

You can select another backend if needed. For now, you can stick with the default values. Some backends may require configuring variables.

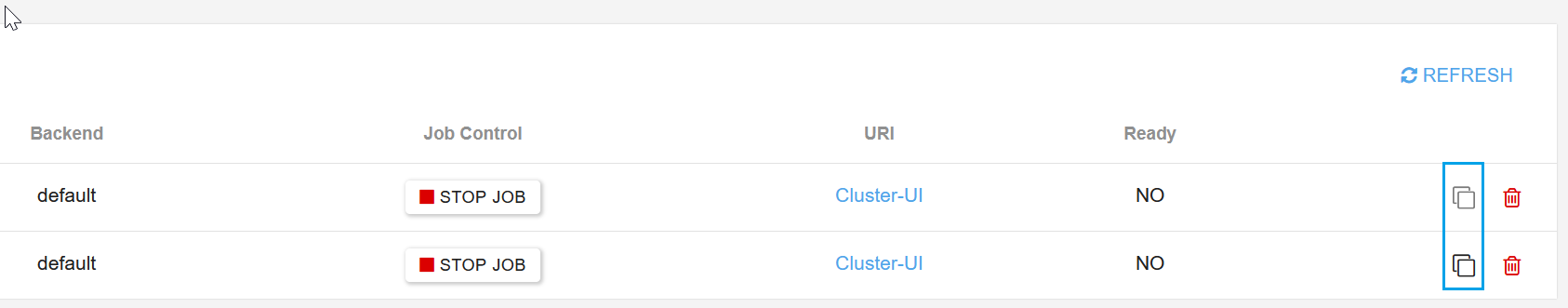

Cloning a Cluster¶

It is a good practice to use the cloning function when you want to create different clusters with similar configurations. You can do this using the Clone button on the Terraform configuration view.

Cluster Statuses¶

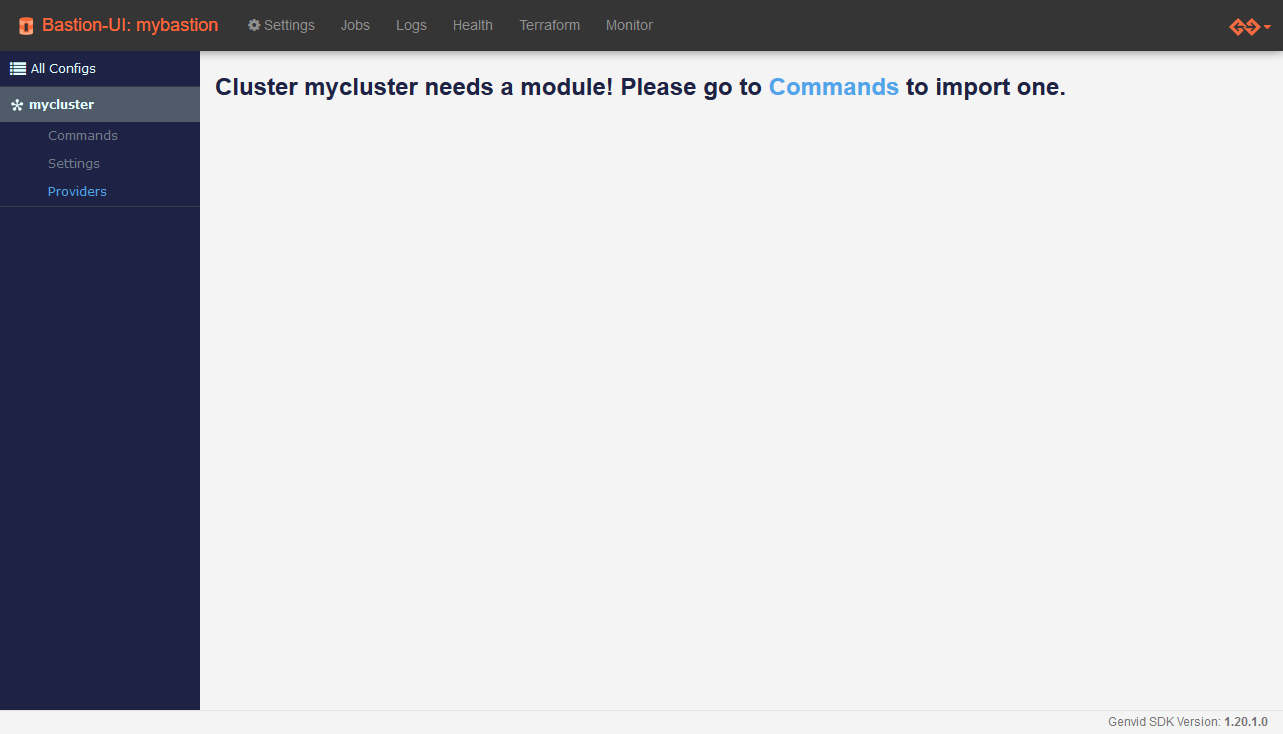

After creating a cluster, its status is EMPTY. This means that the cluster needs a module. The cluster statuses are:

- VOID: The cluster doesn’t exist.

- EMPTY: The cluster exists but has no modules.

- DOWN: The cluster is initialized but resources are empty.

- UP: The Terraform apply procedure succeeded.

- BUSY: A command is currently running.

- ERROR: An error occurred when checking the cluster.

- INVALID: The cluster is reporting an invalid or unknown status.

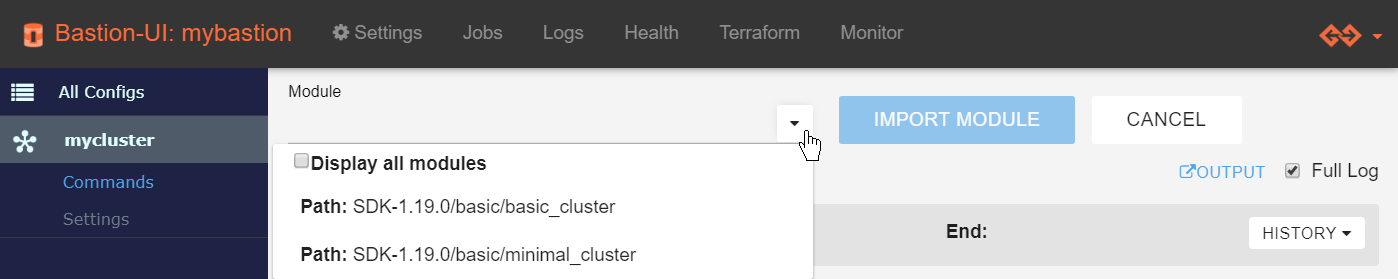

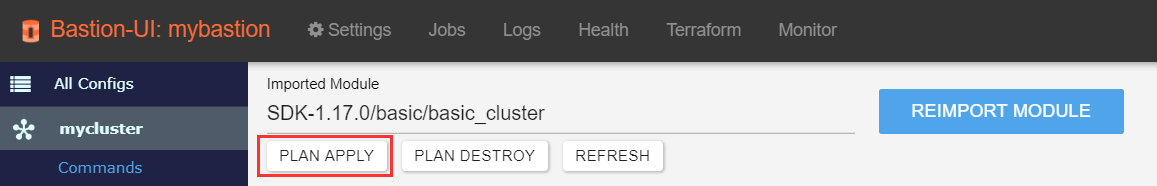

Importing a Terraform Module¶

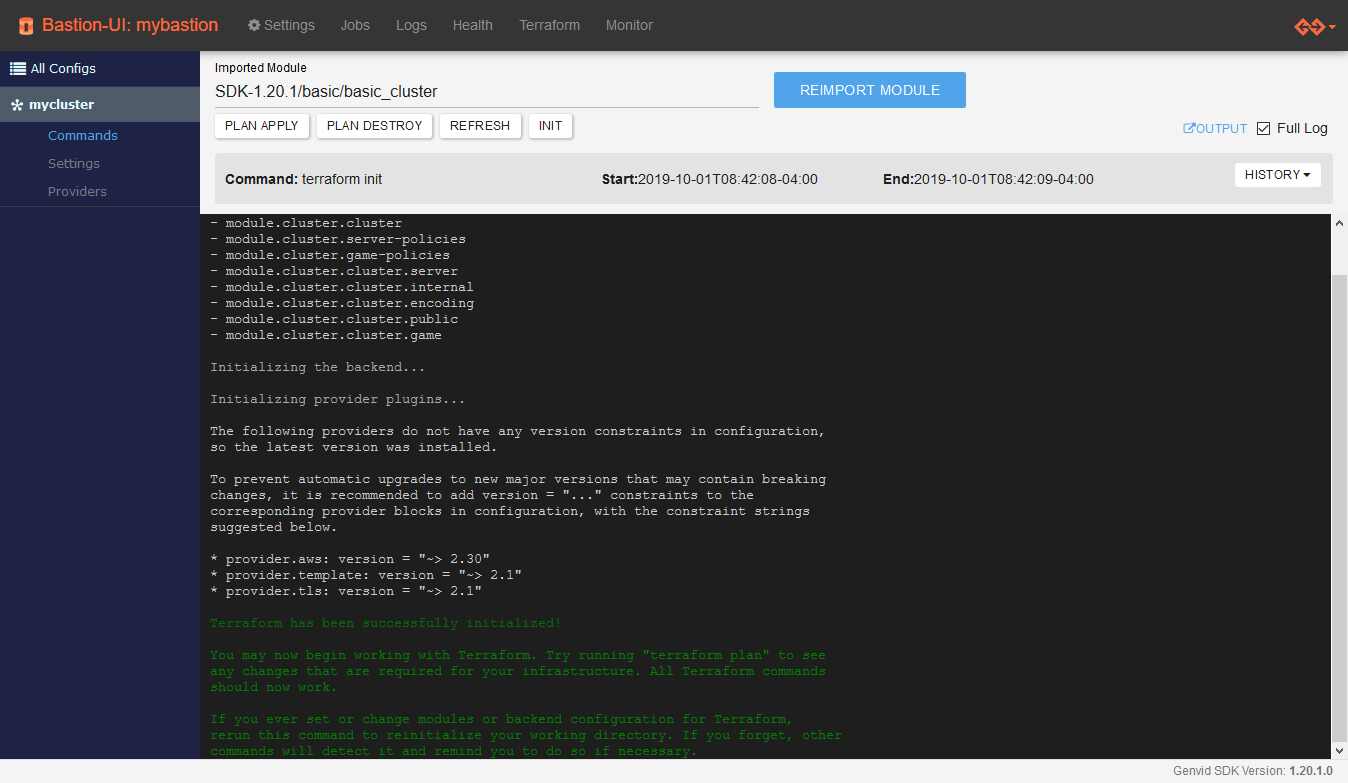

Before configuring the cluster, you must initialize it with a module. This copies the module template and downloads any modules and plugins it requires.

- Click Commands.

- Select the SDK-{version}/basic/basic_cluster module.

- Click Import module.

You will see an initialization log appear. This step should last only a few seconds.

Tags¶

All resources created for a cluster have the following tags to identify them:

- genvid:cluster-name: The name of the cluster.

- genvid:creator-service-id: The Bastion ID, when created through the Bastion UI.

Terraform Settings¶

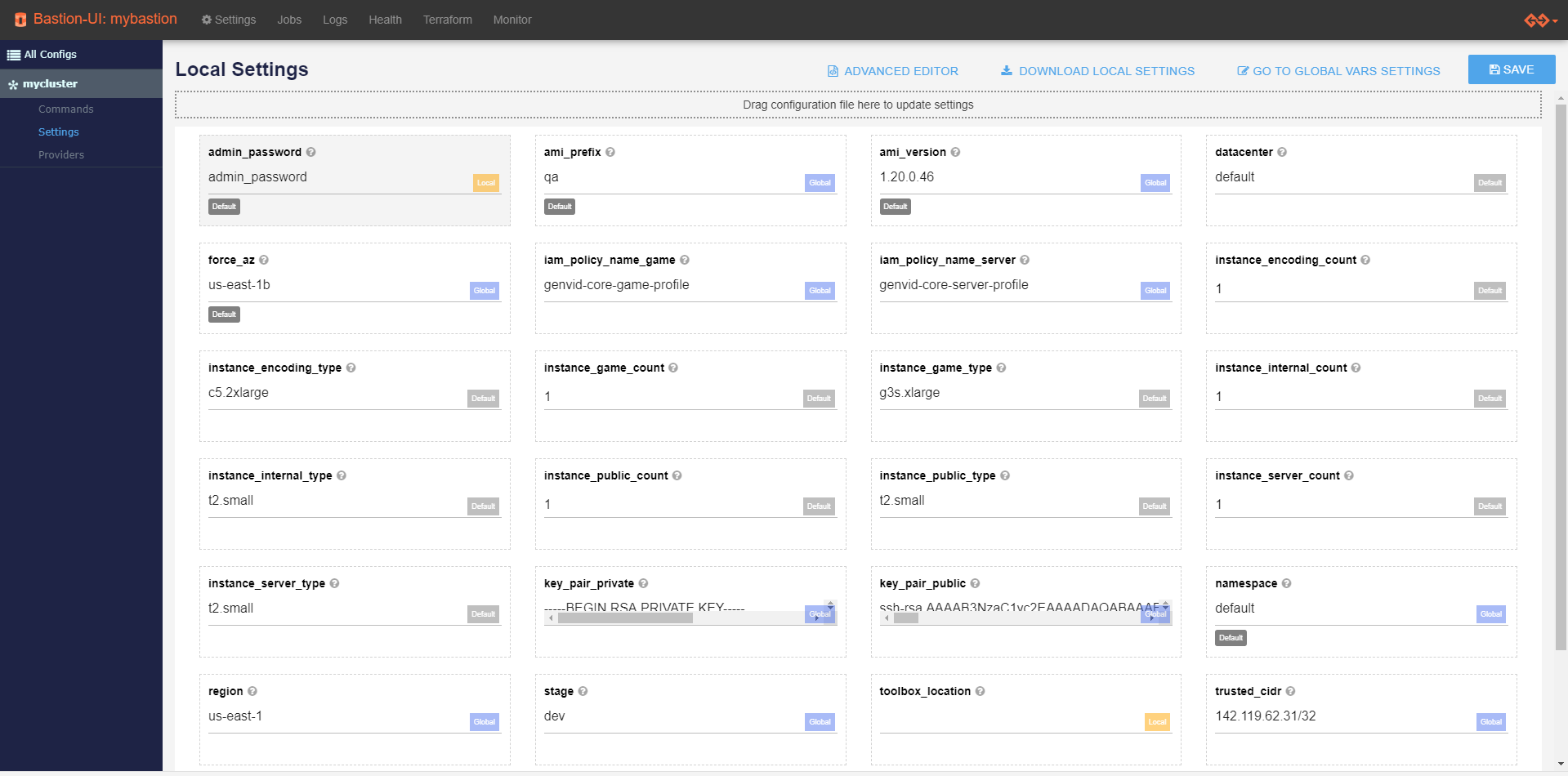

We use Terraform to build the cluster infrastructure, so these settings configure that infrastructure. Click the Settings sub-link for your cluster and edit the settings.

Note

- See the Tick Setup page for Tick-specific settings.

For ease of use you can also download the settings on the page to a JSON file. You can drag and drop the edited file on the form to edit multiple settings at once or revert to a previous configuration. Please note that all unsaved configurations will be lost.

Details for cluster variables can be found here: Terraform Modules.

If you need load balancing and SSL, use the module named basic_cluster_alb_ssl. In addition to the variables of basic_cluster, the following additional variables are available:

The values of the following two settings are provided automatically to the Terraform configuration via environment variables. You don’t need to set them manually.

- cluster is the name of your cluster.

- bastionid is the name of your bastion.

The basic_cluster and basic_cluster_alb_ssl configurations take care of everything: creating a new VPC, key pair, etc. If you want more control or your permissions don’t let you create an AWS VPC or Roles, use the minimal_cluster or minimal_cluster_alb_ssl configurations. These configurations let you provide those elements directly.

Like the basic_cluster_alb_ssl configuration does for basic clusters, the minimal_cluster_alb_ssl configuration adds ALB/SSL support to minimal clusters.

- The subnets are selected in the following order. The first rule that applies is used:

1- If var.subnet_ids is provided, use it.

2- If var.subnet_ids is not provided and var.azs is provided, use var.azs to find the related subnet IDs, deploy the servers to them, and assign the ALB to the existing subnets in each availability zone.

In either scenario, providing the vpc_id is mandatory. Note that the ALB needs at least two public subnets in at least two availability zones, so you should provide either at least two AZs or at least two subnet_ids. If you leave both variables empty, make sure that the provided VPC has at least two availability zones and that each availability zone has exactly one subnet, which must be public.

See the Terraform Configuration Documentation for more information on how to set up these variables globally or locally.
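The precedence between subnet_ids and azs can be pictured with a short sketch in plain Python. It is not the module's Terraform code: the function name `select_subnets` and the `vpc_subnets_by_az` mapping (availability zone to the single public subnet found there) are hypothetical, and the fallback branch is an assumption inferred from the paragraph about leaving both variables empty.

```python
def select_subnets(subnet_ids, azs, vpc_subnets_by_az):
    """Pick the subnets to deploy into, following the documented precedence.

    vpc_subnets_by_az is a hypothetical mapping from availability zone to
    the public subnet ID found in that zone of the provided VPC.
    """
    if subnet_ids:
        # Rule 1: an explicit subnet list always wins.
        chosen = list(subnet_ids)
    elif azs:
        # Rule 2: otherwise look up the subnet of each requested zone.
        chosen = [vpc_subnets_by_az[az] for az in azs]
    else:
        # Assumed fallback: use the one public subnet of every zone in the VPC.
        chosen = [vpc_subnets_by_az[az] for az in sorted(vpc_subnets_by_az)]
    if len(set(chosen)) < 2:
        # The ALB needs at least two public subnets in two availability zones.
        raise ValueError("at least two distinct subnets are required for the ALB")
    return chosen
```

The final check only counts distinct subnets. It does not verify that they are public or that they sit in different availability zones, which you still need to ensure yourself.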

Important

The subnet_ids list is ordered, and its order affects where the servers are deployed: a modulo function assigns servers to the entries of the subnet_ids list.

The servers are deployed across the subnet list (subnet_ids) in round-robin order. For example, with subnet_ids = [“SN1”, “SN2”, “SN3”], instance_encoding_count = “2”, and instance_internal_count = “5”, the servers are deployed as follows:

- instance_encoding-1: “SN1”

- instance_encoding-2: “SN2”

- instance_internal-1: “SN1”

- instance_internal-2: “SN2”

- instance_internal-3: “SN3”

- instance_internal-4: “SN1”

- instance_internal-5: “SN2”

Because the order of the “subnet_ids” list determines server assignment, deleting the subnet SN2 from the above list makes Terraform recreate the servers in the remaining subnets “SN1” and “SN3”. The new distribution is:

- instance_encoding-1: “SN1”

- instance_encoding-2: “SN3”

- instance_internal-1: “SN1”

- instance_internal-2: “SN3”

- instance_internal-3: “SN1”

- instance_internal-4: “SN3”

- instance_internal-5: “SN1”

To limit the deletion to the servers in the subnet you removed, replace that subnet in subnet_ids with another subnet instead of deleting its entry. The remaining servers then keep their original subnets. Continuing the example above, the new ordered list is subnet_ids = [“SN1”, “SN1”, “SN3”]. The second entry in the list can be any subnet you have. The distribution is:

- instance_encoding-1: “SN1”

- instance_encoding-2: “SN1”

- instance_internal-1: “SN1”

- instance_internal-2: “SN1”

- instance_internal-3: “SN3”

- instance_internal-4: “SN1”

- instance_internal-5: “SN1”

If you want a better distribution of the servers, you can take the “subnet_ids” output, which looks like [‘SN1’, ‘SN2’, ‘SN3’], and change it to [‘SN1’, ‘SN1’, ‘SN3’, ‘SN1’, ‘SN3’, ‘SN3’]. The modulo assignment then spreads the servers over the longer list.
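The distribution schemas in this section can be reproduced with a few lines of Python. This is a sketch of the round-robin rule as the examples describe it, not the module's actual Terraform expression. The function name `assign_subnets` is hypothetical, and the counts are passed as integers for simplicity.

```python
def assign_subnets(subnet_ids, instance_encoding_count, instance_internal_count):
    """Map each server name to a subnet using its index modulo the list length."""
    assignment = {}
    roles = (
        ("encoding", instance_encoding_count),
        ("internal", instance_internal_count),
    )
    for role, count in roles:
        for index in range(count):
            # Each role restarts at the head of the list, as in the schemas above.
            name = f"instance_{role}-{index + 1}"
            assignment[name] = subnet_ids[index % len(subnet_ids)]
    return assignment
```

Calling `assign_subnets(["SN1", "SN2", "SN3"], 2, 5)` yields the first schema. Calling it with `["SN1", "SN3"]` or `["SN1", "SN1", "SN3"]` yields the second and third, which makes it easy to preview the effect of a new subnet_ids order before applying it.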

Terraform Providers¶

Providers are specific to a cluster and, more specifically, to the module imported in that cluster. Thus, let us start by creating a new cluster and importing a module into it.

Fig. 6 On the first import, you will see the provider plugins being downloaded. It is a great way to know which providers are used by a specific module.

Every provider has its own set of arguments it recognizes. The best way to know how a specific provider can be customized is by visiting the Terraform Providers page and looking up the specific providers you are interested in.

Customizing Terraform Providers¶

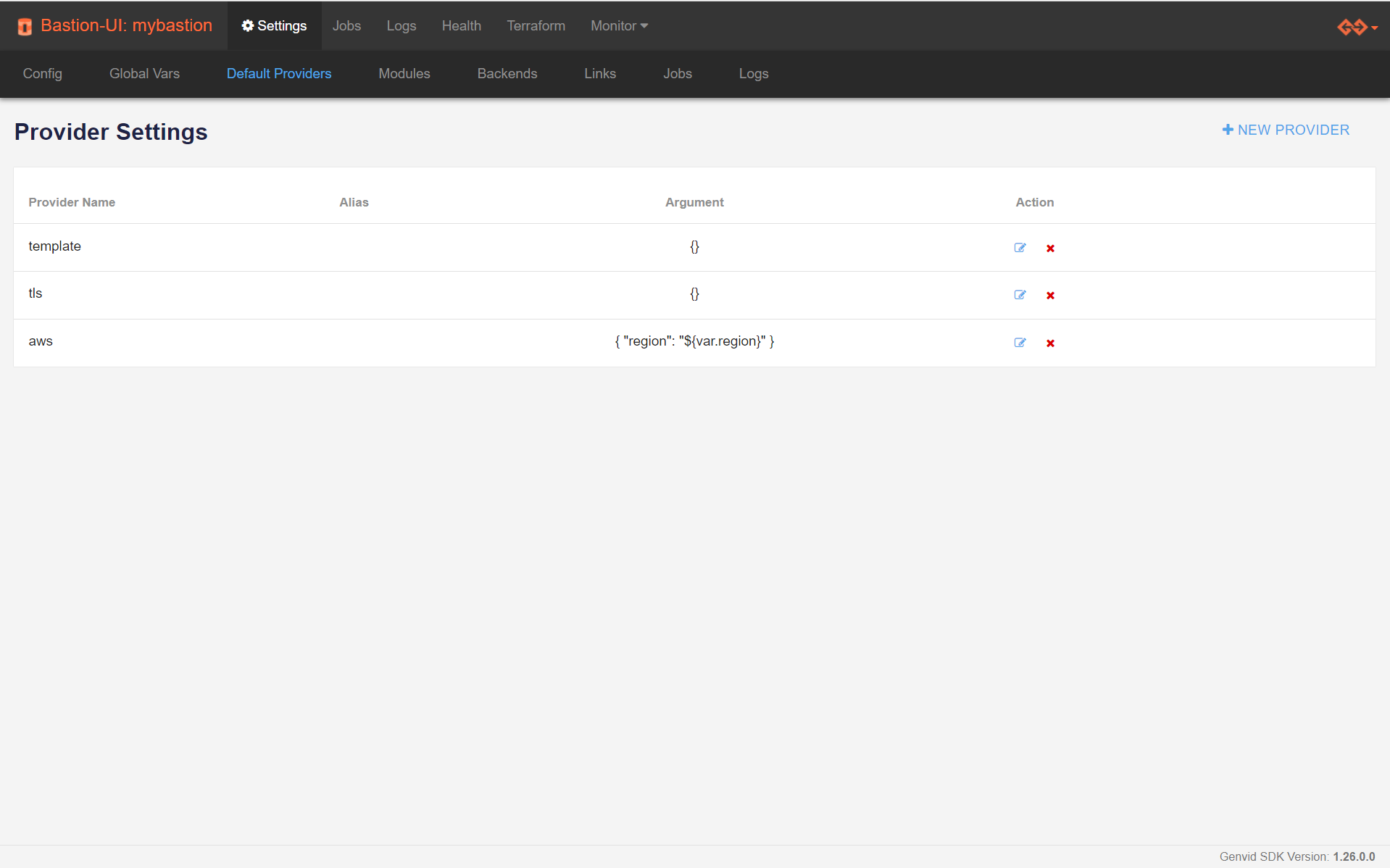

(Global) Default Configuration¶

There are two levels at which providers configuration can be customized: at the global (bastion) level or on the cluster directly after importing a module. The global configuration is meant to give a default configuration to new clusters (or on a reimport of the module).

During the installation of the bastion, we set up such a configuration so that your clusters work out of the box with any of the modules we provide. The information is loaded from a file stored at bastion-services/terraform/providers/default.json.

When you import a module into a cluster, only the default configurations for providers that this module uses are applied. So it’s safe to add configurations for providers that aren’t used by every module.

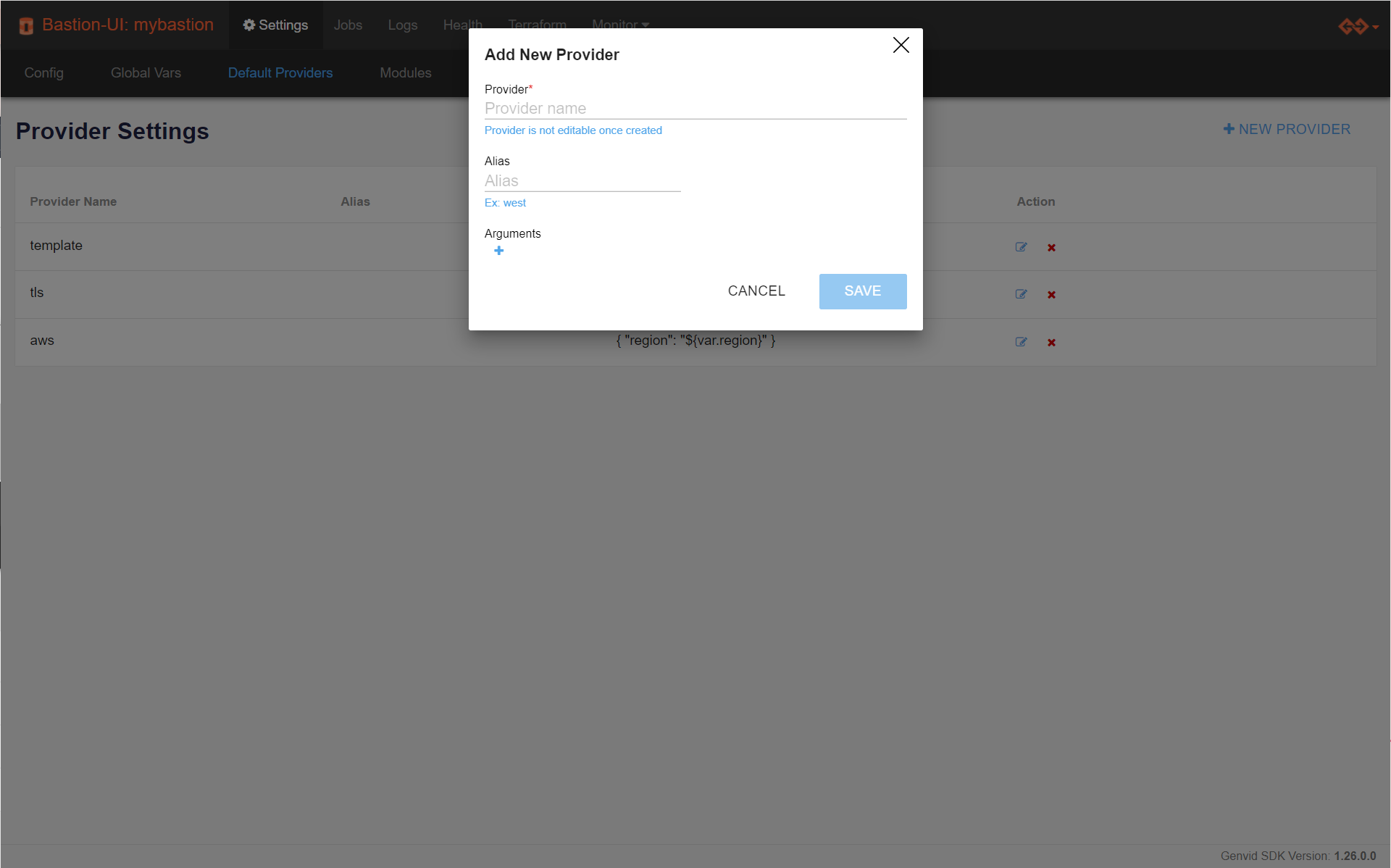

To add a new default configuration for a provider:

- Open the Bastion UI page.

- Click Settings.

- Click Providers.

- Click + NEW PROVIDER.

- Enter a unique name for Provider and Alias to identify the provider you want to customize.

Important

The identifiers you use for Provider and Alias are checked when you import a module into a cluster. If they don’t match a provider used by that module, the default configuration won’t be applied.

- If you have one or more arguments you need to set for a provider, click + to add each.

- Click SAVE to save the provider configuration.

Per-Cluster Configuration¶

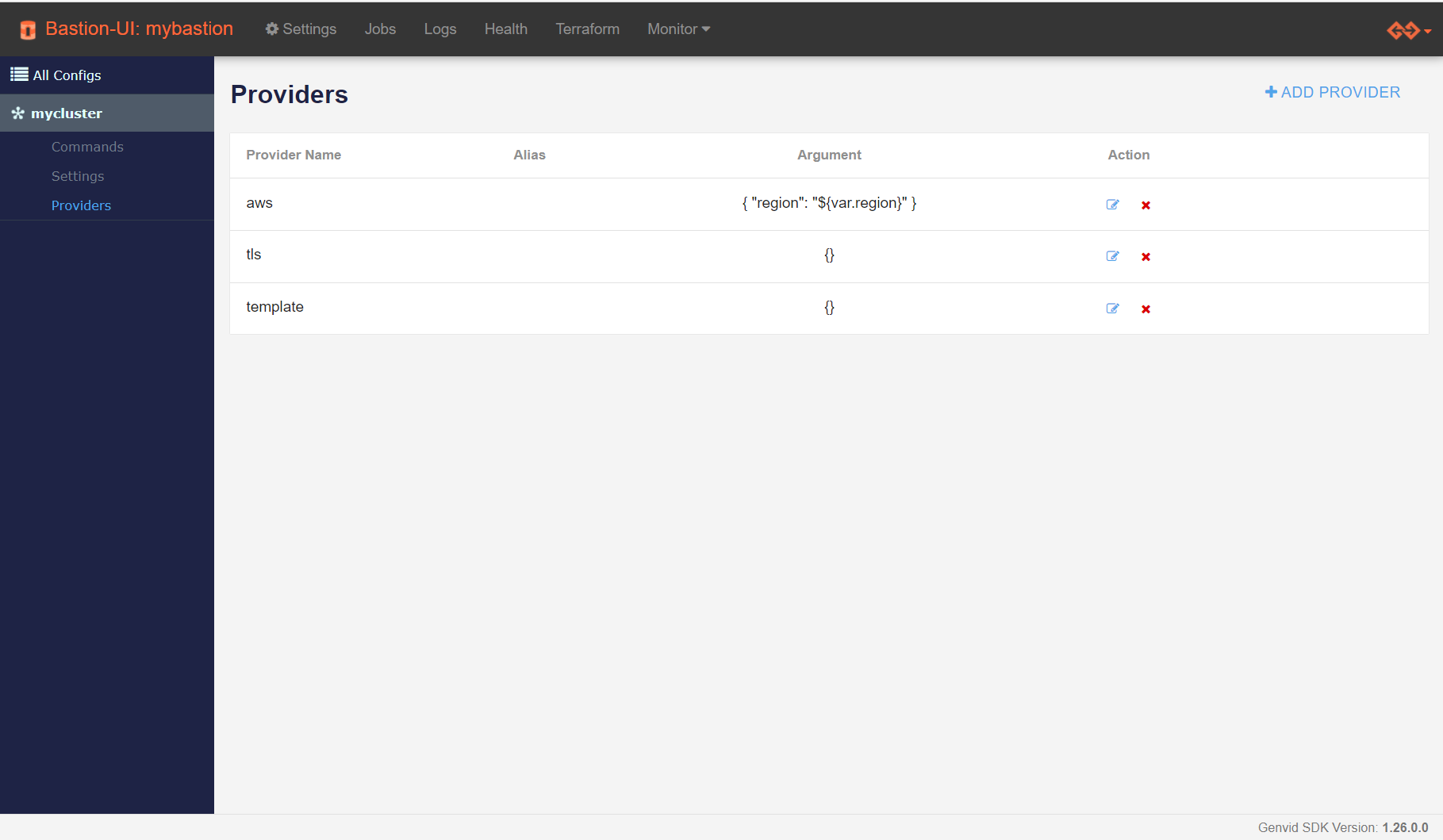

After importing a module on your cluster, you can go to the Provider tab (under Terraform) to see its current configuration.

Fig. 7 Like for the Settings tab, if you did not import a module yet, you will be invited to do so by going to the Commands page.

By default, the configuration should be empty, unless some providers have a global configuration which will be automatically applied by this point. Any further change made to the cluster’s providers will only affect that cluster.

Warning

If the cluster’s module is re-imported, the global configuration will override its configuration.

Fig. 8 As you can see in this example, Terraform variable interpolation is supported to specify values for the providers’ arguments.

Once your cluster is created and you know which providers you want to customize, you may go to Terraform, from the top menu, and then Provider under the cluster’s name from the left menu.

The process to customize providers is very similar to the global case. The + ADD PROVIDER button is used to add a configuration for a provider not yet listed. Otherwise, the edit button (the left Action button) can be used to customize existing configurations.

Warning

The changes to the providers configuration might not take effect until a Refresh command is issued.

Terraform Providers Arguments¶

You can give aliases to different provider configurations. The Terraform init phase considers the configurations, then the plan and apply phases use the arguments. Arguments vary between providers.

Note

If you notice some arguments are ignored at the plan phase, try to issue a Refresh to update Terraform’s state with the new configuration.

Provider aliases might not be useful unless you write your own Terraform module. More information can be found on the Terraform Provider Configuration page.

Terraform Provider Versions¶

Important

Starting with Genvid MILE SDK version 1.28.0, you will no longer be able to directly change a provider version from the Bastion UI.

See Terraform Provider Requirements for a guide on specifying provider versions in your custom modules.

If you wish to manually modify a provider version on one of our supplied clusters, you can find the declarations in bastion-services/terraform/modules/basic/cluster/versions.tf.

When you change the Terraform files, refresh your modules in the Bastion UI for the changes to take effect. See Modules Section for more information.

Terraform Providers Toolbox Support¶

We offer subcommands in the Toolbox to help manage providers. The following table summarizes the commands available.

| Command | Description |
|---|---|
| genvid-bastion get-default-terraform-providers | Display the current global providers configuration in JSON. |
| genvid-bastion set-default-terraform-providers | Set the global providers configuration from a JSON file matching the format from get-default-terraform-providers. |
| genvid-bastion delete-default-terraform-providers | Delete the existing global providers configuration. |
| genvid-clusters get-terraform-providers | Display the current providers configuration of a specific cluster. |
| genvid-clusters set-terraform-providers | Set the providers configuration of a specific cluster from a JSON file matching the format from get-terraform-providers. |
| genvid-clusters delete-terraform-providers | Delete the existing providers configuration of a specific cluster. |

While the easiest way to create a new providers configuration is to use the bastion UI and then the toolbox to redirect the configuration into a file, here is an example in case you want to write it by hand:

    [
      {
        "provider": "aws",
        "alias": null,
        "arguments": {
          "region": "us-west-2"
        }
      },
      {
        "provider": "template",
        "alias": null,
        "arguments": {}
      },
      {
        "provider": "tls",
        "alias": "myalias",
        "arguments": {}
      }
    ]
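If you prefer to generate such a file rather than type it, the following sketch builds the same structure with Python's standard json module. The helper names `provider_entry` and `dump_providers` are hypothetical. Rejecting duplicate provider and alias pairs is an assumption based on the pair being used to identify a configuration, not a documented rule of the Toolbox.

```python
import json


def provider_entry(provider, alias=None, arguments=None):
    """Build one entry with the three keys used in the example above."""
    return {
        "provider": provider,
        "alias": alias,
        "arguments": dict(arguments or {}),
    }


def dump_providers(entries):
    """Serialize entries to JSON text, rejecting duplicate (provider, alias) pairs."""
    seen = set()
    for entry in entries:
        ident = (entry["provider"], entry["alias"])
        if ident in seen:
            # Assumption: each provider and alias pair should appear only once.
            raise ValueError(f"duplicate provider configuration: {ident}")
        seen.add(ident)
    return json.dumps(entries, indent=2)
```

For example, `dump_providers([provider_entry("aws", arguments={"region": "us-west-2"}), provider_entry("template"), provider_entry("tls", alias="myalias")])` returns text equivalent to the hand-written example. You can save that text to a file and pass the file to the set commands listed in the table.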

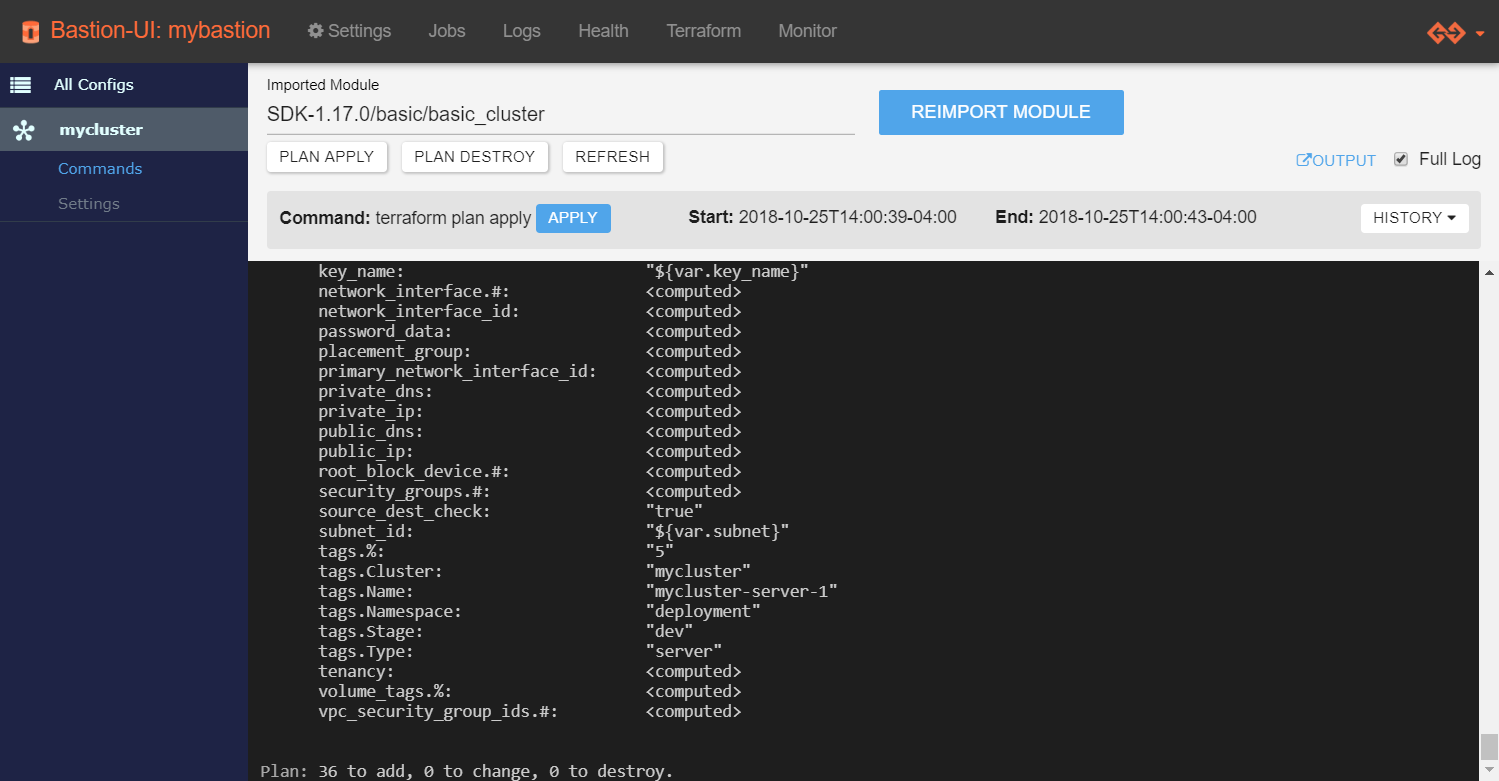

Applying a Terraform Infrastructure¶

Terraform Apply is the operation that builds the cluster infrastructure.

- Click Plan Apply to create an execution plan.

- Verify the changes match what you need.

- After verifying the changes, click Apply to execute the plan.

You should see the log starting to appear.

- If Terraform fails to build the infrastructure:

  - Check the error message.

  - Update the settings.

  - Apply again.

At the end of this step, there is a cluster running on the cloud. When you check All Configs on the Terraform page, the status is UP. This means that the infrastructure is up, but the Genvid MILE SDK is not yet present in it.

Important

Beginning in version 1.19.0, instances provision in the background after Terraform creates them. You need to wait until an instance registers as a Nomad client in the Cluster UI before using it. In particular, Windows instances may take up to 30 minutes before they are ready.

Note

The public machine IP is not the same as the one used when setting up your AMI.

To access your game machine, use the IP from game_public_ips with the same password set during the AMI setup.

To retrieve the game_public_ips:

- Make sure the cluster is UP.

- Go to the Commands page of your cluster.

- Click the OUTPUT button.

You’ll find the game_public_ips listed in the JSON file.

Setting up the SDK on a Running Cluster¶

Once a cluster status is UP, you will need to set up the Genvid MILE SDK on it and run your project there.

Follow the SDK in the Cloud guide to do so.

Destroying a Terraform Infrastructure¶

The terraform destroy command removes all resources from the cluster configuration. You should only destroy a cluster’s Terraform infrastructure when you no longer need to run your project on that cluster. To destroy the Terraform infrastructure, click Plan destroy.

If you’re sure you want to destroy the current Terraform infrastructure, click the Destroy button to confirm.

See Destroy Infrastructure for more information.

Deleting a Cluster¶

To delete a cluster, you must first destroy its Terraform infrastructure. Then go to All Configs and click the Delete button.

Using Custom Repositories¶

You can add and remove individual Terraform repositories in the bastion. Each repository contains one or more modules that can be used to instantiate a cluster. Use the following command to list the current repositories:

genvid-clusters module-list

Use the following command to add a new module:

genvid-clusters module-add -u {URL} {name}

- {URL} can be any source compatible with go-getter, including local files.

- {name} is the destination folder for this repository on your bastion.

After the URL is cloned in the bastion repository, it will be available as a source under modules/module. See Terraform’s Module Configuration for more details on using modules.

Bastion remembers the origin of each module, so you can update them using the following command:

genvid-clusters module-update [name]

The name is optional. If you don’t provide a name, it will update all repositories.

You can remove a module using the following command:

genvid-clusters module-remove {name}

See also

- genvid-clusters: Genvid Cluster-Management script documentation.

- Bastion API for Terraform

- Terraform’s Module Configuration: Documentation of Modules on Terraform.

- go-getter: A library for fetching URLs in Go.